ISI MStat PSB 2009 Problem 4 | Polarized to Normal

This is a very beautiful sample problem from ISI MStat PSB 2009 Problem 4. It is based on the idea of Polar Transformations, but it needs a good deal of observation to realize that. Give it a try!

Problem- ISI MStat PSB 2009 Problem 4

Let \(R\) and \(\theta\) be independent and non-negative random variables such that \(R^2 \sim {\chi_2}^2 \) and \(\theta \sim U(0,2\pi)\). Fix \(\theta_o \in (0,2\pi)\). Find the distribution of \(R\sin(\theta+\theta_o)\).

Prerequisites

Convolution

Polar Transformation

Normal Distribution

Solution :

This problem may get nasty if one tries to find the required distribution by the so-called CDF method. It's better to observe a bit before moving forward! Recall how we derive the probability distribution of the sample variance of a sample from a normal population.

Yes, you are thinking right: we need to use a Polar Transformation!

But before transforming, let's make some modifications to reduce future complications.

Given, \(\theta \sim U(0,2\pi)\) and \(\theta_o \) is some fixed number in \((0,2\pi)\), so, let \(Z=\theta+\theta_o \sim U(\theta_o,2\pi +\theta_o)\).

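Hence, we need to find the distribution of \(R\sin Z\). Since \(R^2 \sim {\chi_2}^2 \) has pdf \(\frac{1}{2}exp(-\frac{u}{2})\) for \(u>0\), the substitution \(u=r^2\) gives the pdf of \(R\) as \(r \ exp(-\frac{r^2}{2})\) for \(r>0\). As \(R\) and \(Z\) are independent, the joint pdf of \(R\) and \(Z\) is,

\(f_{R,Z}(r,z)=\frac{r}{2\pi}exp(-\frac{r^2}{2}) \ \ r>0, \theta_o \le z \le 2\pi +\theta_o \)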

Now, let the transformation be \((R,Z) \to (X,Y)\),

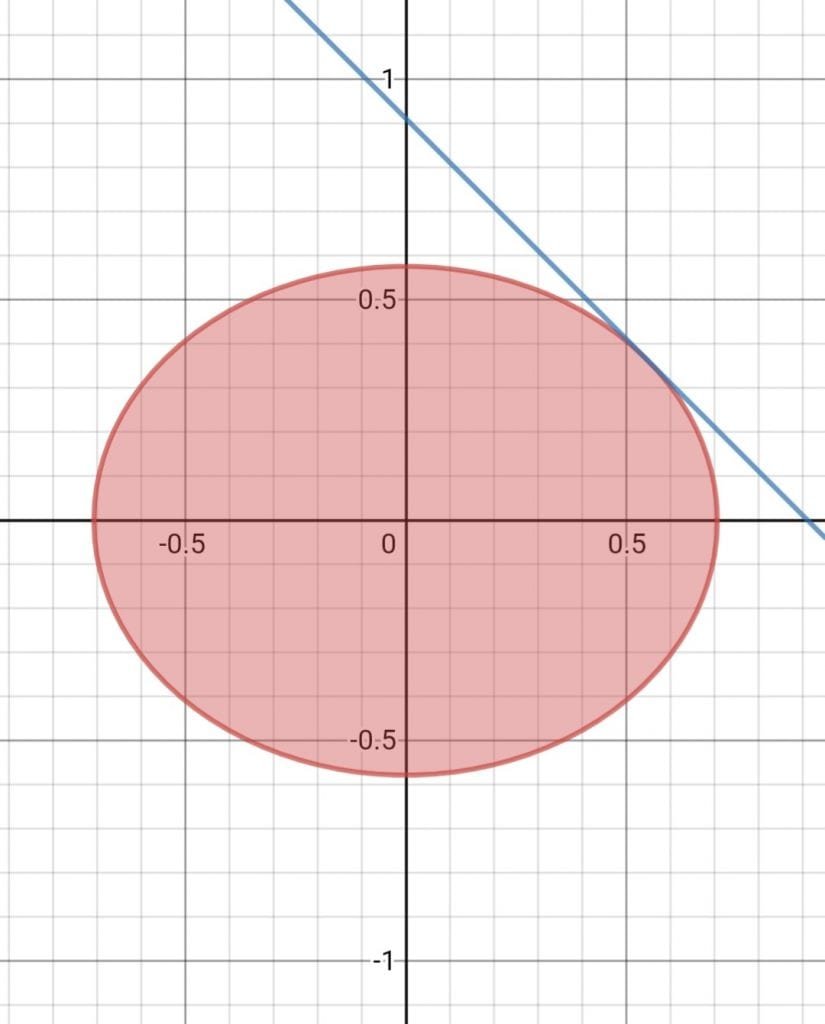

\(X=R\cos Z \\ Y=R\sin Z\), Also, here \(X,Y \in \mathbb{R}\)

Hence, \(R^2=X^2+Y^2 \\ Z= \tan^{-1} (\frac{Y}{X}) \)

Hence, verify the Jacobian of the transformation \(J(\frac{r,z}{x,y})=\frac{1}{r}\).
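One way to carry out this check is sketched below, assuming we write \(r=\sqrt{x^2+y^2}\) and \(z=\tan^{-1}(\frac{y}{x})\) and differentiate directly (the lowercase symbols are just the values taken by \(R, Z, X, Y\)):

\(\frac{\partial r}{\partial x}=\frac{x}{r}, \ \ \frac{\partial r}{\partial y}=\frac{y}{r}, \ \ \frac{\partial z}{\partial x}=-\frac{y}{r^2}, \ \ \frac{\partial z}{\partial y}=\frac{x}{r^2}\)

\(J(\frac{r,z}{x,y})=\frac{x}{r} \cdot \frac{x}{r^2}-\frac{y}{r} \cdot (-\frac{y}{r^2})=\frac{x^2+y^2}{r^3}=\frac{1}{r}\)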

Hence, the joint pdf of \(X\) and \(Y\) is,

\(f_{X,Y}(x,y)=f_{R,Z}(\sqrt{x^2+y^2}, \tan^{-1}(\frac{y}{x})) J(\frac{r,z}{x,y}) \\ =\frac{\sqrt{x^2+y^2}}{2\pi}exp(-\frac{x^2+y^2}{2}) \cdot \frac{1}{\sqrt{x^2+y^2}} \\ =\frac{1}{2\pi}exp(-\frac{x^2+y^2}{2})\) , \(x,y \in \mathbb{R}\).

Yes, now it looks familiar, right?

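Since we need the distribution of \(Y=R\sin Z=R\sin(\theta+\theta_o)\), we integrate \(f_{X,Y}\) with respect to \(x\) over the real line. The joint pdf factorizes as \(\frac{1}{\sqrt{2\pi}}exp(-\frac{x^2}{2}) \cdot \frac{1}{\sqrt{2\pi}}exp(-\frac{y^2}{2})\), and the first factor integrates to 1, so \(f_Y(y)=\frac{1}{\sqrt{2\pi}}exp(-\frac{y^2}{2})\), \(y \in \mathbb{R}\). We end up with the conclusion that,

\(R\sin(\theta+\theta_o) \sim N(0,1)\). Hence, we are done!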
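If you would like a numerical sanity check of this result, here is a minimal simulation sketch using only the Python standard library. It is not part of the original solution. The function names `simulate_r_sin` and `max_cdf_gap`, the choice \(\theta_o=1\), the sample size and the seed are all illustrative assumptions. It also uses the fact that a \({\chi_2}^2\) variable is an exponential variable with rate \(\frac{1}{2}\).

```python
import math
import random
from statistics import NormalDist, fmean, pvariance


def simulate_r_sin(theta_0, n=200_000, seed=0):
    """Draw n samples of R * sin(theta + theta_0) (hypothetical helper)."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        # R^2 ~ chi-square with 2 d.f., which is exponential with rate 1/2
        r = math.sqrt(rng.expovariate(0.5))
        # theta ~ U(0, 2*pi), drawn independently of R
        theta = rng.uniform(0.0, 2.0 * math.pi)
        samples.append(r * math.sin(theta + theta_0))
    return samples


def max_cdf_gap(samples, points=(-2.0, -1.0, 0.0, 1.0, 2.0)):
    """Largest gap between the empirical CDF and the N(0,1) CDF at a few points."""
    n = len(samples)
    std_normal = NormalDist()  # mean 0, standard deviation 1
    return max(
        abs(sum(s <= p for s in samples) / n - std_normal.cdf(p))
        for p in points
    )


samples = simulate_r_sin(theta_0=1.0)  # theta_0 = 1.0 is an arbitrary choice
print("mean    :", fmean(samples))
print("variance:", pvariance(samples))
print("CDF gap :", max_cdf_gap(samples))
```

If the derivation is right, the printed mean should be close to 0, the variance close to 1, and the CDF gap small, whichever \(\theta_o \in (0,2\pi)\) you plug in.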

Food For Thought

From the above solution, the distribution of \(R\cos(\theta+\theta_o)\) can also be determined, right? Can you go further and investigate the distribution of \(\tan(\theta+\theta_o)\)? \(R\) and \(\theta\) are the same variables as defined in the question.

Give it a try!
